Beyond Accuracy: Understanding Precision and Recall in Machine Learning

Demystifying Precision and Recall

Precision-Recall: The Twin Performance Evaluation Metrics

Precision and recall are two important metrics for evaluating the performance of machine learning models. They are mostly used to evaluate classification models.

Precision measures the percentage of positive predictions that are correct:

precision = TP/(TP+FP)

- TP (true positives): The number of positive cases that were correctly predicted as positive.

- FP (false positives): The number of negative cases that were incorrectly predicted as positive.

Recall measures the percentage of actual positive cases that were correctly predicted as positive:

recall = TP/(TP+FN)

- FN (false negatives): The number of positive cases that were incorrectly predicted as negative.
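To make the two formulas concrete, here is a minimal Python sketch. The function names and the sample counts are hypothetical, and returning 0.0 when a denominator is zero is just one possible convention, not something the formulas themselves prescribe.

```python
def precision(tp: int, fp: int) -> float:
    """Share of positive predictions that are correct: TP / (TP + FP)."""
    predicted_positive = tp + fp
    # Assumed convention: return 0.0 when the model predicts no positives.
    return tp / predicted_positive if predicted_positive else 0.0


def recall(tp: int, fn: int) -> float:
    """Share of actual positives that were found: TP / (TP + FN)."""
    actual_positive = tp + fn
    # Assumed convention: return 0.0 when there are no actual positives.
    return tp / actual_positive if actual_positive else 0.0


# Hypothetical counts, chosen only for illustration.
print(precision(tp=8, fp=2))  # 8 correct out of 10 positive predictions
print(recall(tp=8, fn=4))     # 8 found out of 12 actual positives
```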

A Spam and Non-spam Email Classification Model

To explain this better, I will use a spam email classifier that classifies emails as ‘spam’ or ‘non-spam’.

Suppose you created a classifier model whose purpose is to classify an email as spam or non-spam. Your confusion matrix shows you the number of true positives, true negatives, false positives and false negatives your model produced.

A confusion matrix table:

                     Actual
                     positive   negative
                     --------   --------
Predicted positive   TP         FP
Predicted negative   FN         TN

- TP (True positives): The number of spam emails your model correctly classified as spam.

- TN (True negatives): The number of non-spam emails your model correctly classified as non-spam.

- FP (False positives): The number of non-spam emails wrongly classified as spam.

- FN (False negatives): The number of spam emails wrongly classified as non-spam.
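The four cells can be counted directly from the actual and predicted labels. Below is a rough sketch of that idea in plain Python. The helper `confusion_counts` and the six sample emails are made up for illustration and are not taken from any library.

```python
def confusion_counts(actual, predicted, positive="spam"):
    """Count TP, FP, FN and TN for a binary classifier."""
    tp = fp = fn = tn = 0
    for a, p in zip(actual, predicted):
        if p == positive and a == positive:
            tp += 1  # spam correctly classified as spam
        elif p == positive:
            fp += 1  # non-spam wrongly classified as spam
        elif a == positive:
            fn += 1  # spam wrongly classified as non-spam
        else:
            tn += 1  # non-spam correctly classified as non-spam
    return {"TP": tp, "FP": fp, "FN": fn, "TN": tn}


# Hypothetical labels for six emails.
actual = ["spam", "spam", "spam", "non-spam", "non-spam", "spam"]
predicted = ["spam", "non-spam", "spam", "spam", "non-spam", "spam"]

counts = confusion_counts(actual, predicted)
print(counts)  # {'TP': 3, 'FP': 1, 'FN': 1, 'TN': 1}
```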

Your spam classifier model was given 60,000 emails and the results were as follows:

                     Actual
                     positive   negative
                     --------   --------
Predicted positive   TP=49057   FP=4022   | 53079
Predicted negative   FN=1825    TN=5096   | 6921
                     50882      9118

Actual is the actual number of spam and non-spam emails that were given.

Predicted is the number of spam and non-spam emails that your model predicted.

To break that down:

- 49057 of the 50882 actual spam emails were correctly classified as spam (TP)

- 5096 of the 9118 actual non-spam emails were correctly classified as non-spam (TN)

- 4022 non-spam emails were incorrectly classified as spam (FP)

- 1825 spam emails were incorrectly classified as non-spam (FN)

So your precision and recall scores will be:

precision score = TP/(TP+FP) = 49057/(49057+4022) = 0.92

recall score = TP/(TP+FN) = 49057/(49057+1825) = 0.96

Looking at our results, 92% for precision and 96% for recall are pretty high. You should also note that the data used in our example is arbitrary and does not reflect practical results.

Another thing to note is that our example has a lot more spam samples than non-spam, which makes the dataset heavily imbalanced.
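If you want to check the arithmetic yourself, the short Python sketch below recomputes the row and column totals and both scores from the four counts in the table. The variable names are my own choice.

```python
# Counts taken from the confusion matrix in this example.
tp, fp, fn, tn = 49057, 4022, 1825, 5096

predicted_spam = tp + fp       # row total: emails the model called spam
predicted_non_spam = fn + tn   # row total: emails the model called non-spam
actual_spam = tp + fn          # column total: emails that really are spam
actual_non_spam = fp + tn      # column total: emails that really are non-spam
total = tp + fp + fn + tn

precision = tp / predicted_spam
recall = tp / actual_spam

print(predicted_spam, predicted_non_spam)  # 53079 6921
print(actual_spam, actual_non_spam)        # 50882 9118
print(total)                               # 60000
print(f"precision = {precision:.2f}")      # precision = 0.92
print(f"recall    = {recall:.2f}")         # recall    = 0.96
```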

The Precision-Recall Trade-off

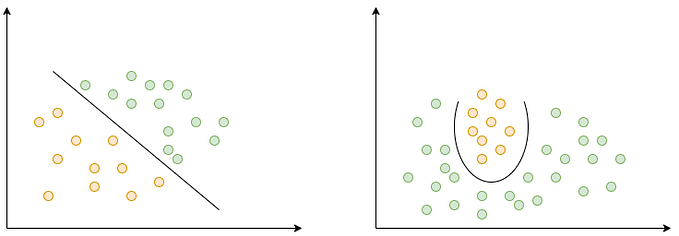

The precision-recall trade-off means that choosing a higher precision will usually result in a lower recall and, vice versa, choosing a higher recall will usually result in a lower precision.

A classification model tries to find an optimal balance between precision and recall, but sometimes you may need to adjust the trade-off for different use cases.

Whether your model leans towards higher precision or higher recall is determined by a set threshold: your model gives each email sample a score and, based on that score, classifies the email as spam or non-spam.

More on the threshold and the score later on.

Your classifier computes a score for every email sample it receives using a decision function. A decision function takes an input data point and outputs a score for it, based on the patterns and relationships the model observed in the features of your spam and non-spam emails.

If the score is greater than the threshold, the classifier assigns the instance to the positive class; otherwise, it assigns it to the negative class. Comparing these predictions with the actual labels gives you the true and false positives and negatives.

The threshold can be seen as a mark or boundary used to classify the data point's score as positive or negative. It sets the level of trade-off between your precision and recall: raising it usually increases precision and lowers recall, while lowering it usually does the opposite.
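Here is a small sketch of how moving the threshold shifts precision and recall. The scores and labels are invented for eight hypothetical emails, and `precision_recall_at` is an illustrative helper, not a function from any library.

```python
def precision_recall_at(scores, is_spam, threshold):
    """Predict spam when the score is greater than the threshold,
    then return (precision, recall) for that threshold."""
    predicted = [s > threshold for s in scores]
    tp = sum(p and a for p, a in zip(predicted, is_spam))
    fp = sum(p and not a for p, a in zip(predicted, is_spam))
    fn = sum(a and not p for p, a in zip(predicted, is_spam))
    # Assumed convention: report 0.0 when a denominator is zero.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Hypothetical decision-function scores and true labels for eight emails.
scores = [0.95, 0.90, 0.80, 0.65, 0.60, 0.40, 0.30, 0.10]
is_spam = [True, True, False, True, False, True, False, False]

for threshold in (0.85, 0.5, 0.2):
    p, r = precision_recall_at(scores, is_spam, threshold)
    print(f"threshold={threshold}: precision={p:.2f}, recall={r:.2f}")
# threshold=0.85: precision=1.00, recall=0.50
# threshold=0.5: precision=0.60, recall=0.75
# threshold=0.2: precision=0.57, recall=1.00
```

On this toy data, lowering the threshold catches more of the spam (higher recall) but lets more non-spam through as spam (lower precision).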

The following plot showcases the idea of precision-recall trade-off:

The Problem with Precision and Recall

Precision and recall may be good evaluators of your model's performance, but it is important to know their weaknesses.

A high precision indicates that most of the emails the model flags as spam really are spam, but it does not tell us how many true positives the model misses, i.e. the number of spam emails it failed to identify. From precision alone, you never know how many spam emails you didn’t detect.

A high recall indicates that the model is good at identifying all of the true positives, but it doesn’t tell us how many false positives the model produces, i.e. the number of emails wrongly identified as spam.
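A quick way to see both weaknesses is to compare two extreme spam filters. The counts below are entirely hypothetical and the helper is only a sketch.

```python
def precision_recall(tp, fp, fn):
    """Return (precision, recall) from confusion-matrix counts."""
    return tp / (tp + fp), tp / (tp + fn)


# Hypothetical filter A: flags only 10 emails, all truly spam,
# but misses the other 90 of the 100 spam emails.
cautious = precision_recall(tp=10, fp=0, fn=90)

# Hypothetical filter B: catches all 100 spam emails,
# but also flags 400 legitimate emails as spam.
aggressive = precision_recall(tp=100, fp=400, fn=0)

print(f"cautious:   precision={cautious[0]:.2f}, recall={cautious[1]:.2f}")
print(f"aggressive: precision={aggressive[0]:.2f}, recall={aggressive[1]:.2f}")
# cautious:   precision=1.00, recall=0.10
# aggressive: precision=0.20, recall=1.00
```

Filter A looks perfect if you only check precision, and filter B looks perfect if you only check recall, which is why the two metrics are best read together.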

It is often desirable to have both high precision and high recall, but there is usually a trade-off between the two.

With that, you can now understand what precision-recall is and how to interpret it.

Which is more important, precision or recall?

The importance of precision and recall depends on the specific application. In some cases it is more important to have high precision, such as when a machine learning model is used to confirm a medical diagnosis before a risky treatment, where a false positive is costly. In other cases it is more important to have high recall, such as when a machine learning model is used to detect fraudulent transactions, where missing a positive case is costly.

How do you decide on the optimal precision-recall threshold?

Some models learn and choose a threshold automatically, while others need the threshold to be set manually.

A couple of techniques are:

- Selecting a threshold using the precision-recall curve: the following is a precision-recall curve

- Hold-out test set

- Grid-search optimization, etc.

These are beyond the scope of this article so I won’t go into detail.
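Still, to give a rough feel for the first technique, here is a minimal sketch that scans a few candidate thresholds (points on the precision-recall curve) and keeps the one with the highest recall among those that meet a minimum precision. The data, the candidate thresholds, the 0.6 precision target and the helper `choose_threshold` are all assumptions made for illustration. In practice you would run this on a hold-out set, not on the training data.

```python
def choose_threshold(scores, is_spam, candidates, min_precision):
    """Return the candidate threshold with the highest recall whose
    precision is at least min_precision, or None if none qualifies."""
    best_threshold, best_recall = None, -1.0
    total_spam = sum(is_spam)
    for threshold in candidates:
        predicted = [s > threshold for s in scores]
        tp = sum(p and a for p, a in zip(predicted, is_spam))
        fp = sum(p and not a for p, a in zip(predicted, is_spam))
        if tp + fp == 0 or total_spam == 0:
            continue  # precision or recall is undefined here, so skip
        precision = tp / (tp + fp)
        recall = tp / total_spam
        if precision >= min_precision and recall > best_recall:
            best_threshold, best_recall = threshold, recall
    return best_threshold


# Hypothetical scores and labels for eight emails.
scores = [0.95, 0.90, 0.80, 0.65, 0.60, 0.40, 0.30, 0.10]
is_spam = [True, True, False, True, False, True, False, False]

best = choose_threshold(scores, is_spam,
                        candidates=[0.85, 0.7, 0.5, 0.2],
                        min_precision=0.6)
print(best)  # 0.5
```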

How to improve precision and recall

There are a number of ways to improve precision and recall, such as:

- Collect more representative data: The more data that is available, the better the machine learning model will be able to learn the patterns in the data.

- Use better features: The features that are used to train the machine learning model can have a significant impact on its performance.

- Use a different machine learning algorithm: There are many different machine learning algorithms available, and some algorithms may be better suited for specific applications than others.

- Tune the hyperparameters of the machine learning algorithm: The hyperparameters of a machine learning algorithm can have a significant impact on its performance.

Conclusion

By understanding precision and recall and how to improve them, you can create more accurate and reliable machine-learning models. It is also important to understand that the quality of your data massively impacts the accuracy of your models.

Thank You.

Feel free to look me up on X @BenjOkezie